
Since 2017, Cockroach Labs has run thousands of benchmark tests across dozens of machine types with the goal of better understanding performance across cloud providers. If there’s one thing we’ve learned in our experiments, it’s this: benchmarking the clouds is a continuous process. Since results fluctuate as the clouds adopt new hardware, it’s important to regularly re-evaluate your configuration (and cloud vendor).

In 2017, our internal testing suggested near-equitable outcomes between AWS and GCP. Only a year later, in 2018, AWS outperformed GCP by 40%, which we attributed to AWS’s Nitro System, present in the c5 and m5 series. So: did those results hold for another year?

Decidedly not. Each year, we’re surprised and impressed by the improvements made across cloud performance, and this year was no exception.


We completed over 1,000 benchmark tests (including CPU, Network Throughput, Network Latency, Storage Read Performance, Storage Write Performance, and TPC-C), and found that the playing field looks a lot more equitable than it did last year. Most notably, we saw that GCP has made noticeable improvements in the TPC-C benchmark such that all three clouds fall within the same relative zone for top-end performance.

The 2020 Cloud Report expands upon learnings from last year’s work, comparing the performance of AWS, GCP, and new-to-the-report Azure on a series of microbenchmarks and customer-like workloads to help our customers understand the performance tradeoffs present within each cloud and its machine types.

What’s New in the 2020 Cloud Report?

In the 2020 report, we've expanded our research. We:

Added Microsoft Azure to our tests

Expanded the machine types tested from AWS and GCP

Open-sourced Roachprod, a microbenchmarking tool that makes it easy to reproduce all microbenchmarks

You might be wondering, why the jump from 2018 to 2020? Did we take a year off? We’ve rebranded the report to focus on the upcoming year. So, like the fashion or automobile industries, we will be reporting our findings as of Fall 2019 for 2020 in the 2020 Cloud Report.

How We Benchmark Cloud Providers

CockroachDB is an OLTP database, which means we’re primarily concerned with transactional workloads when benchmarking cloud providers. Our approach to benchmarking largely centers around TPC-C. This year, we ran three sets of microbenchmark experiments (also using open-source tools) in the build-up to our TPC-C tests.

In our full report, you can find all our test results (and details on the open-source tools we used to produce them), including:

CPU (stress-ng)

Network throughput and latency (iPerf and ping)

Storage I/O read and write (sysbench)

Overall workload performance (TPC-C)

TPC-C Performance

We test workload performance using TPC-C, a popular OLTP benchmark that simulates an e-commerce business with a number of different warehouses processing multiple transactions at once. We chose it given our familiarity with this workload. TPC-C results can be explained through the microbenchmarks listed above, including CPU, network, and storage I/O (more details on those in the full report).

TPC-C is measured in two different ways. One is a throughput metric, transactions per minute C (tpmC), which counts the number of new orders processed per minute. The other is the total number of warehouses supported. Each warehouse has a fixed data size and a maximum tpmC it is allowed to support, so the total data size of the benchmark scales proportionally with throughput. For each metric, TPC-C sets latency bounds that a run must meet to be considered “passing”. Among others, one limiting criterion is that the p90 latency on transactions must remain below 5 seconds. This lets an operator take throughput and latency into account in one metric. Here, we consider the maximum tpmC supported by CockroachDB running on each system before the latency bounds are exceeded.
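As a rough illustration of that selection rule, the sketch below picks the highest-throughput run that still meets the p90 bound. The `Run` record, the `max_passing_tpmc` helper, and all the numbers are hypothetical and are not taken from the report; only the 5-second p90 limit comes from the text above.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

# Passing bound quoted in the text: p90 transaction latency below 5 seconds.
P90_LIMIT_SECONDS = 5.0


@dataclass
class Run:
    """One hypothetical TPC-C run on a given machine type."""
    warehouses: int
    tpmc: float
    p90_latency_s: float


def max_passing_tpmc(runs: Iterable[Run]) -> Optional[Run]:
    """Return the passing run with the highest tpmC, or None if no run passes."""
    passing = [r for r in runs if r.p90_latency_s < P90_LIMIT_SECONDS]
    return max(passing, key=lambda r: r.tpmc, default=None)


# Made-up example data: throughput rises with warehouse count until
# latency exceeds the bound and the run no longer counts.
runs = [
    Run(warehouses=1000, tpmc=12500.0, p90_latency_s=0.4),
    Run(warehouses=2000, tpmc=24800.0, p90_latency_s=1.9),
    Run(warehouses=3000, tpmc=31000.0, p90_latency_s=7.2),  # fails the p90 bound
]
best = max_passing_tpmc(runs)
```

In this made-up data set, the 3,000-warehouse run reports the highest raw tpmC but is excluded because its p90 latency exceeds the bound, so the 2,000-warehouse run is the one that would be reported.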

In 2020, we see a return to similar overall performance in each cloud.

Each result in the chart is the maximum tpmC produced by that cloud and machine type when holding the p90 latency below 5 seconds. This is the passing criterion for TPC-C, and it has been applied to every TPC-C run in this report.

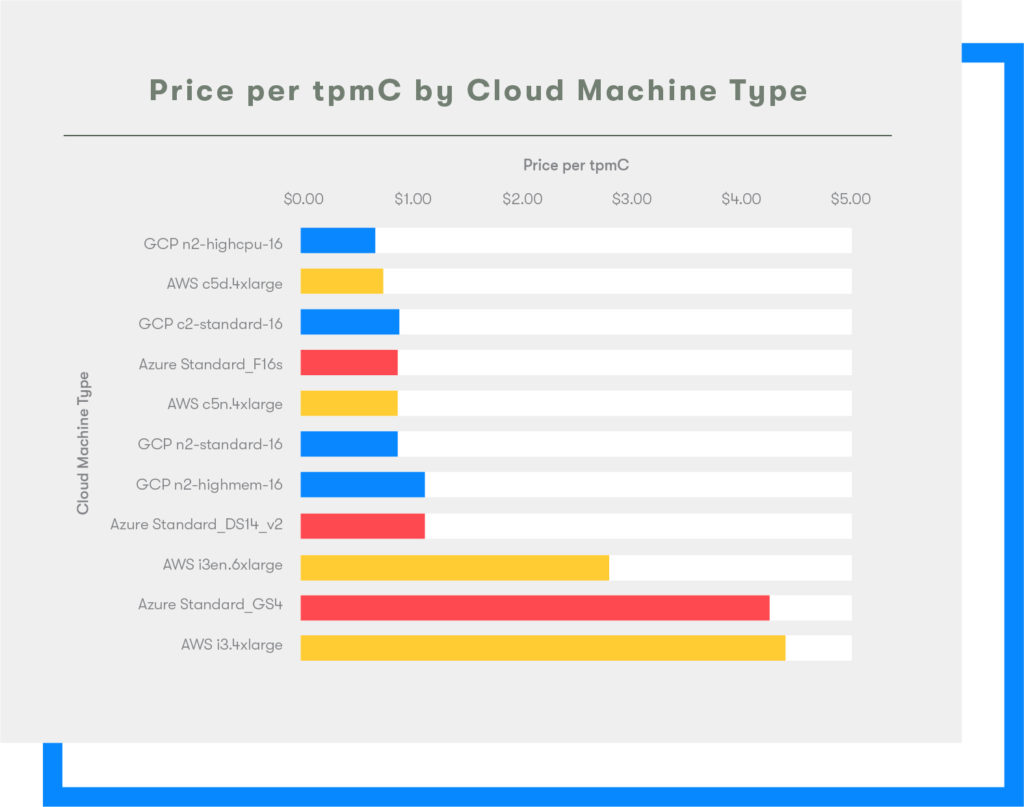

TPC-C Performance per Dollar

Efficiency matters as much as performance. If you can achieve top performance but have to pay 2x or 3x, it may not be worth it. For this reason, TPC-C is typically measured in terms of price per tpmC. This allows for fair comparisons across clouds as well as within clouds. In this analysis, we use the default on-demand pricing available for each cloud because pricing is an extremely complex topic. GCP, in particular, was keen to note that a true pricing comparison model would need to take into account on-demand pricing, sustained use discounts, and committed use discounts. While it’s true that paying up-front costs is expensive, we applied this evenly across all three clouds.

We recommend exploring various permutations of these pricing options depending upon your workload requirements. Producing a complex price comparison across each cloud would be a gigantic undertaking in and of itself, and we believe that Cockroach Labs is not best positioned to offer this kind of analysis.

To calculate these metrics, we divided the max tpmC observed by the cost of running each cloud’s machine type for 3 years (i.e., on-demand hourly price * 3 * 365 * 24).
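The arithmetic can be sketched as follows. The function names and the example price and throughput are hypothetical placeholders, not figures from the report; only the 3 * 365 * 24 multiplier comes from the text. The sketch computes both tpmC per dollar (the division described above) and its inverse, dollars per tpmC.

```python
# Hours in the 3-year window used in the text: 3 * 365 * 24 = 26,280.
HOURS_IN_THREE_YEARS = 3 * 365 * 24


def three_year_cost(hourly_price_usd: float) -> float:
    """On-demand cost of running one machine continuously for 3 years."""
    return hourly_price_usd * HOURS_IN_THREE_YEARS


def tpmc_per_dollar(max_tpmc: float, hourly_price_usd: float) -> float:
    """Max tpmC divided by 3-year cost (higher is better)."""
    return max_tpmc / three_year_cost(hourly_price_usd)


def dollars_per_tpmc(max_tpmc: float, hourly_price_usd: float) -> float:
    """3-year cost divided by max tpmC (lower is better)."""
    return three_year_cost(hourly_price_usd) / max_tpmc


# Hypothetical machine: $0.50/hour on demand, 20,000 max tpmC.
cost = three_year_cost(0.50)
price_per_tpmc = dollars_per_tpmc(20_000, 0.50)
print(f"3-year cost: ${cost:,.2f}, price per tpmC: ${price_per_tpmc:.3f}")
```

Swapping in a discounted hourly rate (for example, a committed-use price) is one simple way to explore how the ranking shifts under other pricing models.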

It’s also important to note that these are list prices for all cloud providers. Depending upon the size of your organization and your overall spend with each provider, you may be able to negotiate off-menu discounts.

Again, all three clouds come close on the cheapest price per tpmC. However, this year we see that the GCP n2-highcpu-16 offers the best performance per dollar in the tested machine types. If price is less of a concern, AWS is the best performer on throughput alone, but when is price not a factor?

Reproduction Steps for the 2020 Cloud Report

All benchmarks in this report are open source so that anyone can run these tests themselves. CockroachDB is an open source product, and we believe in the mission of open source and will continue to support it. We also vetted our results with the major cloud providers to ensure that we properly set up the machines and benchmarks.

All reproduction steps for the 2020 Cloud Report can be found in this public repository. These results will always be free and easy to access and we encourage you to review the specific steps used to generate the data in this blog post and report.

Note: If you wish to provision nodes exactly the same as we do, you can use this repo to access the source code for Roachprod, our open source provisioning system.

Read the Full 2020 Cloud Report

You can download the full report here. It contains all the results, including the lists of the highest performing machines, more details on TPC-C performance, and microbenchmarks for:

CPU

Network Throughput

Network Latency

Storage Read Performance

Storage Write Performance

Happy reading!